Modern Forth Compiler Benchmarks

Background

There was thread last month in the usenet group comp.lang.forth named "What are my options for dictionary hashing?" There were many comments about what hashing options would provide the most effient and fastest dictionary searches in Forth. Statistics were given showing how deep average searches had to go using different options.

I made one comment in the thread saying that not all Forth "words" are words in the dictionary because there are also numbers in Forth source code. Most systems search the dictionary when they encounter a word and if they don't find a match in the Forth dictionary they try to interpret it as a number. This will effect the average search depth and search time since the dictionary is searched all the way when something isn't there. So the percent of numbers in Forth source increases the average search depth. The amount of increase depends on the percent of numbers in the source code.

I have certainly written Forth applications that had a lot of numbers in them. Marcel Hendrix posted the percentage of numbers in a group of Forth source files that he distributes and many of them were close to 50% numbers and one was 80% numbers.

People don't add things like hashes to their dictionary search for no reason. They want to improve the Forth system performance. The fact that there were 353 posts to the thread about using hashes in a Forth dictionary showed that many people are interested in improving the performance of Forth systems.

It was said recently that I might be out of touch with modern Forth comilers. I considered that perhaps that was true and as I have mostly worked with only a few Forth systems for the last decade. So I thought I should see what is out there today. Whatever the term modern Forth is suppose to mean the meaning must change over time.

In 1980 I had several computers with 1MHz clocks and fifty to one hundred machine cycles typical for a threaded Forth primitive. Compilation ran slowly from slow floppies. The largest programs I compiled back then were less than 64KB and they still took several minutes to compile as many will remember. I remember 1980 Forth compiles fondly as they were so much faster than the alternatives at the time. Other compilers were larger and left less free memory and used the slow floppies a lot more due to a DOS being present and file uee.

Some people today are still writing the same sized applications or for the same processors people used in 1980 which is fine but hardly seems like it should qualify as modern Forth to me. Some people are still writing the same sized applications for small embedded systems and that's understandable. But PCs today have a lot more memory and are a lot faster than the ones we used in 1980 and people do larger jobs because of that. Using cross compilers and tethered Forth is a modern practice but doesn't reflect the practice of writing PC sized applications in modern Forth on modern PCs themselves.

Programmers in other languages may not understand what Forth compilers do. One of the things that distinguishes Forth from a lot of other languages is that we often write applications where the Forth compiler is embedded in the Forth application and is called by the application. In many other languages compilation does not happen as often as in Forth. Compilation may need multiple passes over larger source files than Forth so there are tools like "make" to avoid compiling files in a project except those that have been changed since the last compile. The intermediate results of the compile are stored in object files to avoid compiling next time. Most people it seems don't even know how long compiles take because they avoid them.

But in Forth we compile a lot. It is one pass compilation and fast in comparison. Because we do compilation more often it is an important thing to us and we want to make it efficient. More importantly we may do compilation inside of our applications so compiler performance matters more than for people who do it rarely, avoid it as much as they can, and don't include their compiler in their applications. To Forth programmers compiler performance matters.

We should also note that Forth does both interpretation and compilation when compiling. Well written Forth will have interpreted phrases both between definitions and inside of definitions. This was something missing from Chuck's sourceless programming era and one reason he returned to Forth, so source code could express how certain values were calculated at compile time by interpreting source code.

Forth programs may extend the compiler with new compiling words then execute those words to compile more. They may compile data strucutures and code to link them together or examine the data structures just compiled and extract parameters to compile more data structures and code.

Rational

What can be measured with compiler performance benchmarks? It is very easy to write benchmarks that measure specific and simple things like how quickly a compiler can compile numbers or how quickly it processes comments in the source code. A benchmark to measure overall compiler performance has to reflect some mix of what Forth code does when it is compiling.

Extremely large Forth programs are very rare because of the way Forth naturally compresses the description of what a program does. There are exceptions where people talk about gigantic Forth programs. I have never worked with one myself.

The sort of programs I have worked with exercise the compiler and compile images into memory and execute quite a lot of Forth code while compiling a large linked data image. They execute code to manipulate the data image and compile more code and more data. Twenty years ago these things were only as large as a typical Windows Forth system today. They were on the scale of things an F21 could do. Ten years later the compiler had to do more than twenty times more work and this trend continued to today and will probably continue on into the future. So I wrote a he third benchmark that compiles data while executing Forth code for different sized images.

Knowing the profile of a real app I wrote a fairly simple benchmark with a similar mix of Forth calculation and Forth compilation mixed together. I tried to be conservative and was pleased to see that when one of the participants, Win32Forth compiled itself it was fairly close to the benchmark I had written to compile and image of a similar size.

What particular interest me is that such a test for a Forth compiler is testing the overall mix of compiler routines and compiled code. It is a crude way to get a sense of how different modern Forth compilers would perform compiling large modern Forth applications similar to the OKAD suite of programs. The programs themselves are not very large but the amount of execution and compilation that they require is fairly large.

There is quite a huge range of design techniques used in the design of the different ANS Forth systems tested. Because the standard is about the behavior of what standard words to implementors have the freedom to use different implementation techniques in the compiler. They have different dictionary structures and search mechanisms, different threading mechanisms, registers assignments, and optimization methods.

In addition to the range of ANS Forth I tested I began with the original benchmark on colorforth where the OKAD application it was modeled on runs. It has a lot of different tradeoff than the ANS Forth systems. The things it does should give it an advantage on the simple benchmarks where number compilation and comment compilation are timed.

The big benchmark measures a mix of compilation and execution and colorforth has one of the most simple compilers that does simple subroutine threading with a number of Forth primitives inlined in native code. It has tail-recursion but no other code optimization at all. It works like compilers for tiny simple Forth machine targets but the IA32 processors are complex and require complex code optimization to produce high performance code. The colorforth compiler does not seem like it should produce very high performace code for this complex processor target since its compiler is really no more complex than the smallest and simplest threaded ANS Forth in the benchmark. I was particularly currios on where it would fit into the range of modern Forth compilers. Some of the modern ANS Forth compilers employ much more complex code optimiztion to produce higher performance code. Compilers with more effective optimizers should produce code that performs the execution while compiling part of the benchmark faster than system with less code optimization.

One benchmark tests Forth compilers speed at compiling from souce that is half numbers. One test has a profile much like a significant Forth application, a program that Chuck Moore says is the best example of what Forth can do that he has ever seen, OKAD II. From a relatively small amount of Forth source code we compile very large images. To me this is one of the things that distinguishes modern Forth on PCs from other environments and from classic Forth on small machines. The third benchmark just measures the speed of compiling comments.

A native code Forth should execute Forth code faster than a threaded Forth on the same machine. Optimizing native code Forth tend to be an order of magnitude faster than older threaded code systems. But an optimizing compiler may also need more steps to do compilation than a simpler compiler. Having faster code themselves they may be able to to do more steps in the same amount of compile time, but the two things might cancel each other out in some cases. I had never tried testing it before nor had I ever seen anyone else ever tests this important aspect of Forth.

I wrote a benchmark with a similar profile and tweaked its variables that determine how much computation is done during compilation until the benchmark results matched the real application very closely on the same Forth compiler and same machine. Then I tried the small number compiling and large application compilation benchmarks on several modern Forth compilers to see what happened.

By 1990 PCs were a lot bigger and faster than they had been in 1980 so people were compiling larger applications. Some things were even about the size of some modern Forth compilers today. Many of the modern Forth compilers I downloaded from the Internet were more than a few megabytes in size.

By 2000 PCs were bigger and faster than they had been in 1990 and people were compiling larger applications again. This trend continued on to 2010 and I expect it to continue in the future for modern Forth.

Some people claim instantaneous compiles or talk about some mythical 1/4-second rule that says compiles below 1/4-second are ok. That sounds like nice marketing buzzwords but I was not quite ready to believe that modern Forth compilers compiling modern Forth applications on modern machines are "instantaneous" It was my experience that they are not.

There are plenty of benchmarks for lots applications with different profiles. Mostly they are used so that people can see how well a given compiler optimizes a certain type of application code.But I have never seen a benchmark with a profile of a real application that makes extensive use of the Forth compiler in the application.

I noted that over time the term modern would have meant different things and certainly it must be defined by the size of what could be done reasonably at any given time in history as machines and Forth evolved. To chart this history I scaled the CAD profile benchmark to produce images of the same scale as applications the Forth programmers I saw were doing from 1980, 1990, 2000, and 2010. I wrote one benchmark to measure the speed that the compilers compiled numbers, one to measure the speed at which they compile modern programs that push a compiler very hard, and one to measure the speed that they can compile comments.

Listed below are eleven Forth systems that I see people recommending and the time needed to compile applications from three different eras. I used versions of Forth available for free downloads and that could be downloaded and installed within an hour.

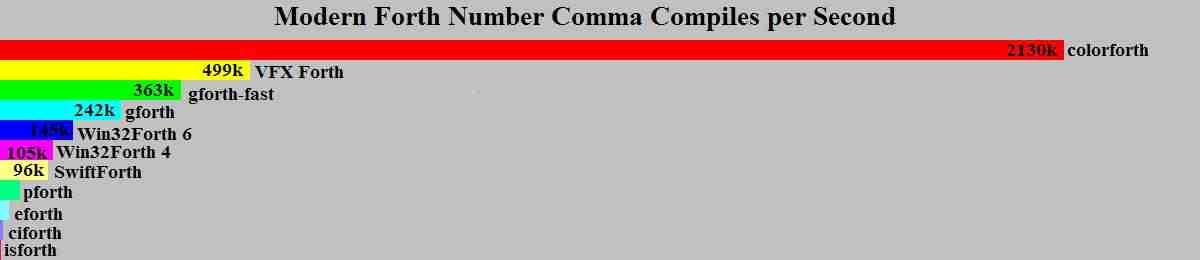

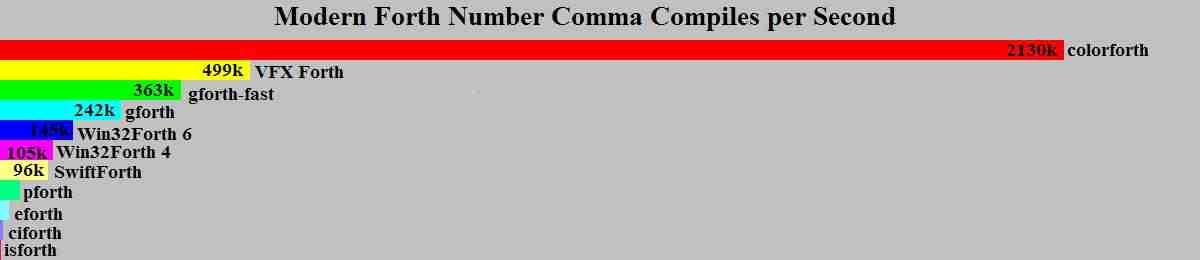

The first benchmark was very simple for the programs that are fifty percent numbers. I simply measures the speed at which systems compile a number and a comma from source. I don't know if the last few are considered modern by everyone but there are people advocating their use today.

small benchmark number of "FFFFFEDC ," compiled per second Forth % of colorforth performance colorforth v3.4m0 10/2010 2130k 100.0% VFX Forth 4.41 9/1/2010 499k 23.4% gForth-fast 0.7.0 2008 363k 17.0% gForth 0.7.0 2008 242k 11.3% Win32Forth v6.14.0 build 2 145k 6.8% Win32Forth 4.2 105k 4.9% SwiftForth 3.2.2 08-jul-2010 96k 4.5% pForth v21 (C) 19k 0.9% eForth32 v2.0 RVN 1990 18K 0.8% ciForth 4.0.6 5k 0.2% isforth 1.8k 0.08%

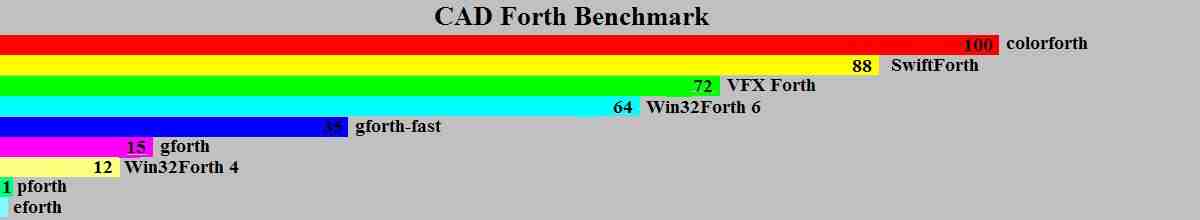

Modern Forth CAD Benchmark

I also tried a benchmark with the profile of a modern app, but that compiled to under 64KB worth of code and structures in SwiftForth on my 2GHz machine. An application the size of the ones we used in 1980 compiled in 79ms in SwiftForth. That's well under the 1/4-second rule. But I don't think solving 1980 problems in 2010 on new machines is really what the term "modern Forth" is suppose to mean.

To save time I ran the 1990 scale test on all of the Forth tested and the 2000 and 2010 scale tests on the faster ones. On the slower ones I just scaled the result for the column.

results of the BIG benchmark matching OKAD 2 application scale from year 1990 2000 2010 Forth times in seconds % of colorforth performance colorforth v3.4m0 2010 12.8 302 1838 100% SwiftForth 3.2.2 08-jul-2010 14.5 339 2080 88% VFX Forth 4.41 17.8 417 2551 72% Win32Forth v6.14.0 build 2 20 470 2870 64% 16 sec system compile on install gforth-fast 0.7.0 36.7 862 5280 35% gforth 0.7.0 83.5 1962 11982 15.3% Win32Forth v4.2 2000 85 1997 12197 12% pforth v21 1990 (C) 1025 24087 147087 1.3% eforth32 v2.0 2978 70006 430465 0.4%

I have been thinking about how aha was designed to take advantage of the F21's code density and simplicity and thinking about an aha2 for GreenArrays or IA32. One would think a processor on the scale of a 6502 or 8051 without memory cache or pipelining would have terrible performance using 200MHz memory when compared to a 2GHz PC on any benchmark. Since F21 has a 20-bit memory bus it is doing fewer bits per word than a IA32 processor so at the bit level is it only getting five eights the performance of a 32-bit PC at the same speed as a modern 2GHz PC.

Because it treats all source with tags that work in a similar way to the way numbers work in colorforth its performance exceeds that of modern Forth for the PC. At 13100k aha achieves about six times the performance of colorforth on the number compile benchmark

Forth numbers ,/sec % of aha performance aha forth x0 13100k 100.00% colorforth v3.4m0 10/2010 2130k 16.25% VFX Forth 4.41 9/1/2010 499k 3.8% gForth-fast 0.7.0 2008 363k 2.7% gForth 0.7.0 2008 242k 1.8% Win32Forth v6.14.0 build 2 145k 1.1% Win32Forth 4.2 105k 0.8% SwiftForth 3.2.2 08-jul-2010 96k 0.7% pForth v21 19k 0.14% eForth32 v2.0 1990 18K 0.14% ciForth 4.0.6 1995 5k 0.04%

A Few Comments

Comments are a useful thing in programs but they do have penalties. They pull a reader's attention away from the code and they have to be processed by the compiler. Still some programs need and get a lot of comments.

ANS Forth has several mechansims for comments and users can define others. ANS has "(" where the comment ends in ")" and "\" to specify a comment to the end of the line of text. It also offers "[IF] [ELSE] [THEN]" conditional compilation and a lot of people use "0 [IF]" to mark everything until the "[THEN]" as a comment but this requires that the Forth compiler parse the source code for the end of the comment.

colorforth and aha have comments with tags that tell the editor and compiler that what follows is a comment so the editor can color it as a comment and the compiler can process it faster. So you would expect these compilers to outperform modern ANS Forth comilers on this benchmark. I used source that was almost all comments to do this benchmark.

aha just has to determine that a comment follows, get the length and skip over it. It requires nothing more than recognizing a tag, fetching a count and adding it to a pointer regardless of how long a comment it. ANS depreciated counted strings. Aha promotes the idea of counted things so that compilers and editors don't have to always process things character by character by character at compile time or run time.

time to compile comment characters Forth nanoseconds per comment character in the compiled code example aha x0 0.000101 colorforth v3.4m0 10/2010 4.07 VFX Forth 4.41 9/1/2010 82.8 Win32Forth v6.14.0 116 Win32Forth 4.2 175 gForth-fast 0.7.0 2008 322 SwiftForth 3.2.2 341 gForth 0.7.0 2008 380 pForth v21 (C) 380

Conclusion

I have to admit that I was out of touch with modern Forth compiler performance. I was a little surprised to see how well balanced the mix of features that colorforth offers are to the needs of a rather demanding task like what OKAD does. It seems the simple compiler techniques it uses result in a good mix of code performance and compiler performance inside of the application it hosts at work.

I was somewhat surprised by the order of the participants and values they gave in the benchmarks. I have heard many times that Forth using optimizing native code compilers produce code that is ten times faster than code from simpler threaded code Forth compilers. Maybe that was true when the compilers were from the same author or vendor. In these benchmarks of Modern Forth compiler performance covers a much wider range than that.

One of my conclusions was that colorforth seems to have right balance of compiler complexity and compiled code efficiency to do the job it is doing. Neither simpler nor more complex compilers did a better job on the workload tested on the same machine. There is not just one right way for Forth to work. A lot of that depends on the job you want to do.

I remembered enough about how aha worked to expect it to win in the simple tests even on a much slower machine. I didn't run the big test on it. It could only have executed the small 1990 version anyway. It was designed for that era and that size problem and could not produce the numbers for the 2000 and 2010 columns. Maybe if I had a system with a large number of F21 I would have done a program like the larger application benchmark set for 2000 and 2010 sized images or to much larger things expected in the future.

But my attention is no longer on F21. Green Array Chips has been taking orders on GA144 chips and development boards. I can do a much larger parallel processor pretty cheaply as the current retail price is below seven cents per computer. Another way to see that is one hundred Forth MIPS per penny. A 10x10 would be a pretty small board and cost about $1000. But with 14400 fast Forth processors it would be good for some of the things that interest me.